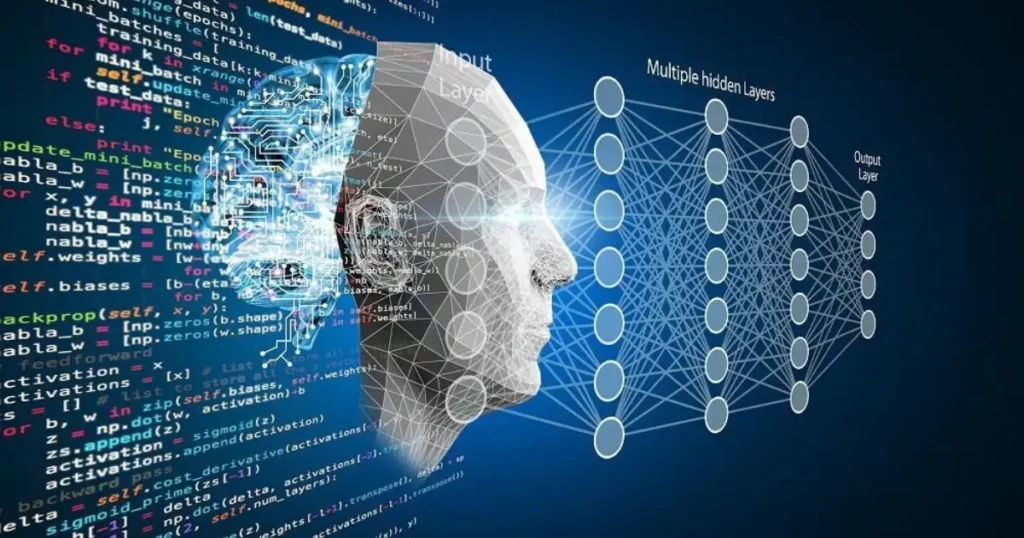

Deep learning algorithms are key to modern artificial intelligence, enabling machines to recognize patterns and make smart decisions. They power applications like image recognition, speech processing, language translation, and self-driving cars, transforming industries such as healthcare and finance.

Understanding the role of deep learning algorithms in AI is essential, as different models serve specific purposes—CNNs for images, RNNs for sequences, GANs for content creation, and transformers for language processing. By exploring these algorithms, their functions, and real-world applications, we can see how they continue to shape the future of AI.

Convolutional Neural Networks (CNNs) – The Backbone of Image Processing

Convolutional Neural Networks (CNNs) are deep learning models built for grid-structured data, especially images. Unlike fully connected neural networks, CNNs use convolutional layers. These layers slide small learned filters across the whole image to detect patterns such as edges and textures and, in deeper layers, entire objects. This makes CNNs very effective for tasks like image classification, facial recognition, medical imaging, and self-driving cars.

How CNNs Work: Convolution and Pooling Layers

CNNs process images through a stack of layers. A convolutional layer slides small learned filters (kernels) across the image. Each filter produces a feature map that responds strongly wherever its pattern, such as an edge or a corner, appears. A pooling layer then downsamples each feature map, for example by keeping only the largest value in every 2×2 window. This cuts computation and makes the features less sensitive to small shifts, while retaining the key information. Because stacked layers build up from simple edges to complex shapes and objects, CNNs can recognize visual patterns with high accuracy and underpin advanced computer vision applications.
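The convolution and pooling steps can be sketched in plain Python. This is a minimal illustration, not a framework implementation. The 6×6 image and the hand-picked vertical-edge kernel are assumptions, since a trained CNN learns its own kernel values. The convolution uses no padding and a stride of 1.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like most deep learning libraries, this computes cross-correlation,
    i.e. the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


def max_pool(feature_map: Matrix, size: int = 2) -> Matrix:
    """Keep the largest value in each non-overlapping size x size window."""
    out = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(max(window))
        out.append(row)
    return out


# Hypothetical 6x6 image: dark (0) on the left, bright (1) in the last two columns
image = [[0, 0, 0, 0, 1, 1] for _ in range(6)]
# Hand-picked vertical-edge kernel (an assumption; real CNNs learn these weights)
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = conv2d(image, kernel)  # 4x4 feature map
pooled = max_pool(features)       # 2x2 map after 2x2 max pooling
print(pooled)  # [[0.0, 3.0], [0.0, 3.0]]
```

The feature map is nonzero only in the columns where dark meets bright. Pooling halves each dimension but keeps that edge response.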

Applications of CNNs in AI

CNNs power many real-world AI applications. They are widely used in facial recognition systems like Face ID, in medical diagnostics to detect diseases from X-rays, and in autonomous vehicles for object detection. In healthcare, CNNs have shown remarkable potential. Researchers at Stanford University trained a CNN on nearly 130,000 images of skin lesions and reported dermatologist-level accuracy in classifying skin cancer. This result highlights how CNNs could improve diagnostic precision and widen access to screening. Beyond healthcare, CNNs also power video analysis, security surveillance, and augmented reality.

Popular CNN Architectures

Several CNN architectures have been developed to improve performance. ResNet adds residual (skip) connections so that each block learns a correction to its input, which makes networks with more than 100 layers trainable. VGG stacks uniform 3×3 convolutional layers for a simple, regular design. MobileNet uses depthwise separable convolutions to cut computation on mobile and embedded devices. These models continue to push the boundaries of image processing in AI.
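Simple arithmetic shows where MobileNet's efficiency comes from. MobileNet replaces most standard convolutions with depthwise separable ones. A depthwise separable convolution applies a k×k filter to each input channel separately, then uses a 1×1 convolution to mix the channels. The sketch below counts the weights in one layer. The layer sizes (3×3 kernel, 64 input channels, 128 output channels) are illustrative assumptions, and bias terms are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution mapping c_in channels to c_out (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """One k x k filter per input channel, then a 1x1 pointwise conv (bias ignored)."""
    depthwise = k * k * c_in   # spatial filtering, channel by channel
    pointwise = c_in * c_out   # 1x1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 64 input channels, 128 output channels
std = standard_conv_params(3, 64, 128)        # 73,728 weights
sep = depthwise_separable_params(3, 64, 128)  # 8,768 weights
print(std, sep, round(std / sep, 1))          # 73728 8768 8.4
```

For this layer, the separable version needs roughly 8.4 times fewer weights. Multiply-adds shrink by the same factor, because both counts are multiplied by the number of output positions.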

Recurrent Neural Networks (RNNs) and Their Advanced Variants

Recurrent Neural Networks (RNNs) are deep learning algorithms that process sequential data. This makes them ideal for tasks that require memory of past inputs, such as speech recognition, language translation, and time-series forecasting. Unlike feedforward neural networks, RNNs have recurrent connections (loops) that pass information from one step to the next, allowing them to retain information over time.

How RNNs Work: Memory and Feedback Loops

- Sequential Processing – RNNs process data one step at a time, feeding the hidden state from each step into the next step along with the new input.

- Hidden States – These store past information, helping the model recognize patterns in sequences like text or speech.

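- Backpropagation Through Time (BPTT) – The standard training method for RNNs. The network is unrolled across all time steps and ordinary backpropagation is applied, so errors at later steps also update the weights that processed earlier inputs.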
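The recurrence above can be sketched as a one-unit RNN. The scalar hidden state is an assumption made to keep the arithmetic visible, since real RNNs use vectors and weight matrices. The weights `w_x`, `w_h`, and `b` are hypothetical values, not trained ones.

```python
import math
from typing import List


def rnn_forward(inputs: List[float], w_x: float, w_h: float, b: float,
                h0: float = 0.0) -> List[float]:
    """Run a one-unit Elman RNN over a sequence and return every hidden state.

    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    """
    h = h0
    states = []
    for x in inputs:
        # The same weights are reused at every step; h carries memory forward
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# A single input "pulse" followed by zeros (hypothetical weights)
states = rnn_forward([1.0, 0.0, 0.0, 0.0], w_x=0.5, w_h=0.8, b=0.0)
print([round(s, 3) for s in states])
```

The hidden state stays positive after the pulse ends but shrinks at every step. The network still "remembers" the first input, just more faintly over time.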

Limitations of Standard RNNs

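While RNN-based deep learning algorithms are powerful, they struggle with long-term dependencies because of vanishing gradients. During BPTT, the gradient is multiplied by a factor at every time step. When those factors are smaller than one, the gradient shrinks exponentially. Inputs from many steps back then have almost no influence on learning, which makes it difficult for standard RNNs to retain earlier inputs in long sequences.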
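The shrinking can be made concrete for a one-unit tanh RNN. By the chain rule, the gradient of the last hidden state with respect to the first is a product of one factor per step, w_h · (1 - h_t²). The derivative of tanh is at most 1, so each factor is at most |w_h|. The sketch assumes w_h = 0.5 and a constant input, both illustrative choices.

```python
import math


def gradient_through_time(steps: int, w_h: float, w_x: float = 0.5, x: float = 1.0) -> float:
    """Return d h_T / d h_0 for a one-unit tanh RNN run for `steps` steps.

    Each step contributes the factor w_h * (1 - h_t**2), which is the derivative of
    h_t = tanh(w_x * x + w_h * h_{t-1}) with respect to h_{t-1}.
    """
    h = 0.0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w_x * x + w_h * h)
        grad *= w_h * (1.0 - h * h)  # chain rule: multiply in this step's factor
    return grad


for steps in (5, 20, 50):
    print(steps, gradient_through_time(steps, w_h=0.5))
```

With w_h = 0.5, the gradient after 50 steps is below 0.5^50 (about 9e-16). At that point the first input has essentially no effect on the weight updates.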

Advanced Variants: LSTMs and GRUs

To address these limitations, more advanced architectures were developed:

- Long Short-Term Memory (LSTM) – Uses special memory cells and gates to retain long-term dependencies, improving text and speech processing.

- Gated Recurrent Units (GRU) – A simplified relative of the LSTM. It uses only an update gate and a reset gate, so it has fewer parameters, yet it often retains long-range information about as well.
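A single GRU step can be sketched with scalar values. The update gate z decides how much of the old state to overwrite. The reset gate r decides how much of the old state to use when proposing a candidate state. A GRU needs three sets of weights (update gate, reset gate, candidate), while an LSTM needs four (input, forget, and output gates plus the cell candidate), which is where the parameter savings come from. All weight values below are hypothetical. Papers and libraries also differ on whether z multiplies the old state or the candidate; this sketch uses one common convention.

```python
import math
from dataclasses import dataclass


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


@dataclass
class GRUParams:
    """Scalar weights for a one-unit GRU (hypothetical values for illustration)."""
    w_z: float  # update gate: input weight
    u_z: float  # update gate: recurrent weight
    b_z: float  # update gate: bias
    w_r: float  # reset gate: input weight
    u_r: float  # reset gate: recurrent weight
    b_r: float  # reset gate: bias
    w_h: float  # candidate state: input weight
    u_h: float  # candidate state: recurrent weight
    b_h: float  # candidate state: bias


def gru_step(x: float, h_prev: float, p: GRUParams) -> float:
    z = sigmoid(p.w_z * x + p.u_z * h_prev + p.b_z)  # update gate
    r = sigmoid(p.w_r * x + p.u_r * h_prev + p.b_r)  # reset gate
    h_cand = math.tanh(p.w_h * x + p.u_h * (r * h_prev) + p.b_h)  # candidate state
    # Blend old state and candidate; z near 0 keeps the old memory almost unchanged
    return (1.0 - z) * h_prev + z * h_cand


# Strongly negative update-gate bias: z stays near 0, so the GRU holds its memory
keep = GRUParams(w_z=1.0, u_z=1.0, b_z=-20.0,
                 w_r=1.0, u_r=1.0, b_r=0.0,
                 w_h=1.0, u_h=1.0, b_h=0.0)
h = 0.9
for x in [0.3, -0.5, 0.8]:
    h = gru_step(x, h, keep)
print(round(h, 4))  # 0.9
```

This setup shows the memory-retention behavior directly. The state passes through three steps of new input almost unchanged.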

Applications of RNNs in AI

RNNs and their advanced variants are widely used in:

- Speech Recognition – Converting spoken words into text (e.g., Siri, Google Assistant).

- Machine Translation – Translating between languages (e.g., Google Translate's 2016 neural system, which was built on LSTMs).

- Chatbots & Virtual Assistants – Powering AI-driven conversations (e.g., customer service bots). Newer assistants such as ChatGPT are built on transformers instead.

- Stock Market Prediction – Analyzing trends and forecasting future prices.

Generative Adversarial Networks (GANs) – AI for Content Creation

Generative Adversarial Networks (GANs) are deep learning models that learn to generate realistic new data from an existing dataset. They are widely used for AI-generated images, video, and music, as well as for deepfakes. A GAN consists of two competing neural networks, a generator and a discriminator, and their contest steadily improves the quality of the generated content.

How GANs Work: The Generator vs. Discriminator

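GANs function through competition between two networks. The generator turns random noise into new data, such as an image. The discriminator estimates whether each sample is real (from the training set) or fake (from the generator). The two are trained in alternation: the discriminator learns to tell real from fake, and the generator learns to fool it. Over time, this adversarial process pushes the generator toward content that is hard to distinguish from real data.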
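The competition is usually expressed as two loss functions. The sketch below computes them from the discriminator's output probabilities only and omits the networks themselves. It uses the binary cross-entropy losses of the original GAN formulation, together with the common "non-saturating" generator variant. The probabilities passed in are made-up examples.

```python
import math
from typing import List


def discriminator_loss(d_real: List[float], d_fake: List[float]) -> float:
    """Binary cross-entropy that pushes D(real) toward 1 and D(fake) toward 0.

    d_real / d_fake hold the discriminator's probabilities that each sample is real.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake: List[float]) -> float:
    """Non-saturating generator loss: push D(fake) toward 1, i.e. fool the discriminator."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Made-up outputs: a confused discriminator (0.5 everywhere) gives 2*ln 2 and ln 2
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]), generator_loss([0.5, 0.5]))
# Made-up outputs: a discriminator that easily spots fakes has a low loss,
# while the generator's loss is high, so the generator receives a strong signal
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]), generator_loss([0.1, 0.05]))
```

At the theoretical equilibrium, the generator's samples match the real data and the discriminator can do no better than output 0.5. That case corresponds to the first line of output.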

Challenges and Ethical Concerns

Despite their potential, GAN-based deep learning algorithms raise ethical concerns. Deepfake technology has sparked debates over misinformation and privacy. AI-generated content can also be misused for fraud, identity theft, or biased media. Ensuring ethical use of GANs requires responsible AI policies and detection methods.

Transformers – The Powerhouse of Natural Language Processing

Transformers have revolutionized natural language processing (NLP) by enabling AI systems to understand, generate, and accurately translate text. Unlike RNNs, transformers process all tokens of a sequence in parallel during training, which makes them more efficient on large-scale text data. A notable example of their impact is OpenAI’s GPT-3 (2020), a 175-billion-parameter transformer language model. It significantly advanced language modeling and enabled applications like chatbots and content-creation tools.

How Transformers Work: The Self-Attention Mechanism

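Transformers rely on a self-attention mechanism. For each word (token), the model computes attention weights over every word in the sequence and builds the word's new representation as a weighted mix of them, so each word's importance is judged in context. The scores for all word pairs can be computed at once with matrix multiplications rather than one step at a time. This parallelism makes transformers faster to train and more scalable, enabling them to handle complex language tasks like machine translation, text summarization, and sentiment analysis.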
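Here is a minimal single-head sketch of scaled dot-product self-attention in plain Python. It assumes toy two-dimensional token embeddings and identity projection matrices. Real models learn `w_q`, `w_k`, and `w_v`, use many heads, and work with much larger dimensions. Each token's query is compared with every token's key, the scores are divided by the square root of the key dimension and normalized with a softmax, and the resulting weights average the value vectors.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product self-attention for one head.

    x has one row per token (seq_len x d_model). Returns (outputs, attention weights).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # One matrix product scores every query against every key: seq_len x seq_len
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted average of all value vectors
    return matmul(weights, v), weights


# Three toy token embeddings and identity projections (assumed values)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(tokens, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of `attn` sums to 1 and shows how strongly one token attends to each token in the sequence. The first token attends equally to itself and to the third token, which shares its first dimension, and less to the second.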

Popular Transformer Models and Their Uses

Several transformer-based deep learning algorithms have gained popularity:

- BERT (Bidirectional Encoder Representations from Transformers) – Improves text comprehension for search engines and chatbots.

- GPT (Generative Pre-trained Transformer) – Excels at generating human-like text and powers AI content creation.

- T5 (Text-to-Text Transfer Transformer) – Handles translation, summarization, and text classification.
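One concrete difference between these models is which positions each token may attend to. BERT's encoder is bidirectional, so every token sees the whole input. GPT uses a causal mask, so each token sees only itself and earlier tokens, which lets it be trained to predict the next token. T5 combines both in an encoder-decoder design. The hypothetical helper below just builds the two mask patterns. In a real model, disallowed scores are set to a very large negative value before the softmax.

```python
from typing import List


def attention_mask(seq_len: int, causal: bool) -> List[List[bool]]:
    """mask[i][j] is True when token i may attend to token j (hypothetical helper).

    causal=False: every token sees the whole sequence (BERT-style encoder).
    causal=True: token i sees only positions <= i (GPT-style decoder).
    """
    return [[(j <= i) or not causal for j in range(seq_len)] for i in range(seq_len)]


for name, causal in (("BERT-style", False), ("GPT-style", True)):
    print(name)
    for row in attention_mask(4, causal):
        print("".join("x" if allowed else "." for allowed in row))
```

The GPT-style pattern is lower-triangular, so no token can "peek" at the words it is being trained to predict.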

Reinforcement Learning – Training AI Through Rewards and Penalties

Reinforcement Learning (RL) is a branch of machine learning in which an AI agent learns by interacting with its environment. Deep reinforcement learning combines it with deep neural networks. Unlike supervised learning, which relies on labeled data, RL trains an agent through trial and error by giving it rewards for good actions and penalties for mistakes. This technique is widely used in robotics, gaming, autonomous systems, and financial modeling.

How Reinforcement Learning Works

In RL, an AI agent takes actions to achieve a goal within an environment. Each action produces a reward or penalty, which helps the agent refine its strategy over time. The agent continuously adjusts its decision-making policy to maximize the total reward it expects to collect over time, improving its ability to solve complex real-world problems.
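The loop above can be sketched with tabular Q-learning, a classic RL algorithm. Q-learning is swapped in here as an example, since the article does not name a specific method. The corridor environment, reward values, and hyperparameters (`alpha`, `gamma`, `epsilon`) are all hypothetical. Deep RL methods such as DQN replace the table with a neural network that estimates the same action values.

```python
import random
from typing import List, Tuple

N_STATES = 5        # hypothetical corridor: positions 0..4, position 4 is the goal
ACTIONS = (-1, +1)  # move left or move right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Hypothetical environment: +1 reward at the goal, -0.01 penalty for every other move."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, -0.01, False


def q_learning(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward + discounted value of the best next action
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
print(policy)
```

The agent is never told where the goal is. The per-move penalty and the final reward alone teach it to walk right from every position.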

Key Applications of Reinforcement Learning

RL-based deep learning algorithms have been successfully applied in various fields:

- Robotics – AI-powered robots learn to perform warehouse automation, assembly line operations, and autonomous navigation tasks.

- Gaming – RL has enabled AI to surpass human players in strategy games like Chess, Go, and Dota 2, showcasing superior decision-making.

- Autonomous Vehicles – RL is being explored for self-driving cars to adapt to changing traffic conditions, improve safety, and make real-time driving decisions.

- Financial Trading – AI-driven trading algorithms optimize investment strategies by analyzing market trends and adjusting trades dynamically.

Challenges and Future Potential of RL

Despite its success, RL faces challenges such as high computational costs, extensive training time, and difficulty in environments with sparse rewards. However, advancements in deep reinforcement learning continue to enhance AI’s ability to self-learn and adapt, making it a crucial component of next-generation AI systems.

Conclusion

Deep learning algorithms are the backbone of modern AI, driving advancements in image recognition, language processing, AI-generated content, and autonomous decision-making. From CNNs and RNNs to GANs, transformers, and reinforcement learning, these models enable machines to learn and adapt efficiently.

As AI evolves, improvements in computing power and ethical AI development will shape its future. While challenges like bias and high resource consumption remain, responsible innovation can ensure AI benefits society. Understanding deep learning algorithms is key to unlocking AI’s full potential in solving real-world problems and driving technological progress.

Frequently Asked Questions (FAQs)

1. What are deep learning algorithms?

Deep learning algorithms are machine learning models that use artificial neural networks with many layers (the "deep" in deep learning) to process data, recognize patterns, and make intelligent decisions. These algorithms power applications like image recognition, natural language processing, and autonomous systems.

2. What are the most popular deep learning algorithms?

The most widely used deep learning algorithms include:

- Convolutional Neural Networks (CNNs) – Best for image and video processing.

- Recurrent Neural Networks (RNNs) – Used for sequential data like speech and text.

- Generative Adversarial Networks (GANs) – Used for AI-generated content and deepfake technology.

- Transformers – Powering advanced natural language processing (e.g., ChatGPT, BERT).

- Reinforcement Learning (RL) – Helps AI learn through rewards and penalties.

3. How do CNNs and RNNs differ?

CNNs are designed for image processing, using layers to detect patterns in visual data. RNNs, on the other hand, are optimized for sequential data, such as speech and text, where past inputs influence future outputs.

4. Why are transformers considered better than RNNs for NLP?

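Transformers process entire text sequences in parallel rather than step by step like RNNs, which speeds up training. Self-attention also connects any two positions in a sequence directly, so information does not have to survive a long chain of recurrent steps. This lets transformers handle long-range dependencies more effectively, making them superior for tasks like machine translation, text summarization, and chatbot development.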

5. What are the real-world applications of deep learning algorithms?

Deep learning algorithms power applications across various industries, such as:

- Healthcare – AI-driven diagnostics and medical imaging.

- Finance – Fraud detection and automated trading.

- Entertainment – AI-generated content and recommendation systems.

- Autonomous Vehicles – Object detection and driving decisions.

6. What are the challenges of deep learning algorithms?

Some key challenges include high computational requirements, significant data dependencies, ethical concerns like bias in AI, and the complexity of training deep networks. Researchers continue to develop more efficient models to address these issues.

7. How can I choose the right deep learning algorithm for my project?

The choice depends on your data type and problem:

- For images and videos → Use CNNs.

- For text and speech → Use RNNs or transformers.

- For AI-generated content → Use GANs.

- For decision-making tasks → Use reinforcement learning.

8. What is the future of deep learning algorithms?

The future of deep learning will focus on more efficient models, reduced energy consumption, better interpretability, and ethical AI development. Emerging technologies like self-supervised learning, neuromorphic computing, and AI transparency will further shape AI’s evolution.